Many people think that science will eventually be able to explain everything that happens in nature, and that technology will be able to reproduce it. Perhaps that is so, but even then, that day lies far in the future. A more likely scenario is that the further science and technology advance, the deeper the mysteries of the world will grow. Even with topics that we believe science has solved for good, when you take a closer look, you'll find that plenty of problems have slipped through the cracks or been swept under the carpet. Furthermore, these are often the issues that are closest to us and most important in our daily lives. Take hunches or intuitions or premonitions, for example. They may have rational-sounding explanations, but our gut feelings tell us something is not quite right after all. Such examples are not at all uncommon. When you think about it, there are lots of things that modern civilization has forgotten all about. Maybe the time has come to stop for a moment and try to remember. The seeds of forthcoming science and technology are impatiently waiting to be discovered among the things we have left behind.

HORIBA, Ltd.

About half a century ago, I was working in the computer room of a large company. Already back then, most people who were working with computers were interested in AI, but at the time it was just expectations for the future. It was very hard to see what sort of system to use, or how to go about it. From that background, the development in the past few years has truly been amazing.

Simply put, the approach itself has changed. In the past, people would keep searching in logical systems, but no matter how far you pressed the logic, you still got stuck. The emphasis has shifted from quality to quantity. Today’s AI approaches the goal by dealing with large numbers. Before, it was more of a linguistic-philosophical approach: the relationship between words and objects, whether logic could be adapted to all sorts of targets, and so forth. Many AI researchers were also interested in things like combinatorial techniques. But what they then created out of all of this was full of bugs, and let’s not even talk about the machine translation systems. AI development has been through several impasses already, and my last experience with AI came at the time of the deepest slump. It was around the time of the Fifth Generation Project.

Trying to make machines do philosophy didn’t work in the end. The few vaguely successful results one could get away with mentioning were pet-raising simulation games like Seaman. If the AI doesn’t “guess” correctly to a certain extent, it’s all meaningless, so researchers back then escaped into gray areas where it didn’t really matter if the AI guessed wrong, because in that case humans would step in and interpret the results at their own convenience. It’s just like the cold reading tricks a fraudster uses. If he judges that “this guy is really angry,” he responds with a comforting word, without ever asking why the guy is angry.

The breakthrough occurred when they came to the decision that the computer was a kind of brain-like machine. For better or worse, AI turned into something useful. In particular, it became better than humans at playing games like shogi and go. Machines are overwhelmingly powerful at repeating the same procedure over and over again, and they don’t get tired. Moreover, since most modern tools are machines, they are also compatible with AI from the start.

The power of sensors no doubt played a large role in achieving these results. Not logic but sensors. Maybe the biggest reason for the success was the acquisition of something corresponding not to our brains but to our five senses. If you feel pain somewhere in your body, the association is made by repeating the same response, without the brain having to sort it out. This is actually very similar to how humans acquire knowledge. Children fervently learn to speak at an age when their senses are sharp. Perhaps computers now are at the stage of discovering the style of human infants.

Thanks to the establishment of 4K and 8K video technologies, the Japanese broadcaster NHK is digitizing old films once again. The more detailed they get, the more sensuous they get, and you can see things you never saw before. Old films have a huge information value, of course, but also contain enormous quantities of information. Thanks to 4K and 8K digitization, it is now possible to grasp that vast quantity of information in the films. When they were broadcast before, you saw that there seemed to be a guy standing in the pitch dark room; now you realize that there’s actually a whole group of people behind him.

This is truly an expansion of the senses. Suddenly you can feel what you weren’t able to feel before, and capture that sensation just as it is as data. One of the strong areas of AI is colorizing black-and-white films. Moreover, it can finish in a couple of seconds what it would take humans months to do. I was watching a colorized version of a morning TV serial from the mid-1960s called Ohanahan, and the sky was nice and blue and the trees were beautiful in vivid colors. But something still felt wrong. There is a scene where the heroine, played by Fumie Kashiyama, is going to an omiai, a meeting for an arranged marriage, and the colors of her kimono look odd. The kimono is a pale bluish green, while the obi is gold brocade. The season is early summer, so the bluish green looks refreshing, almost like a yukata, but the combination with the glittering gold obi is weird. It is also unnatural for a woman going to an omiai to wear a yukata-like kimono. I guess the reference data were poor.

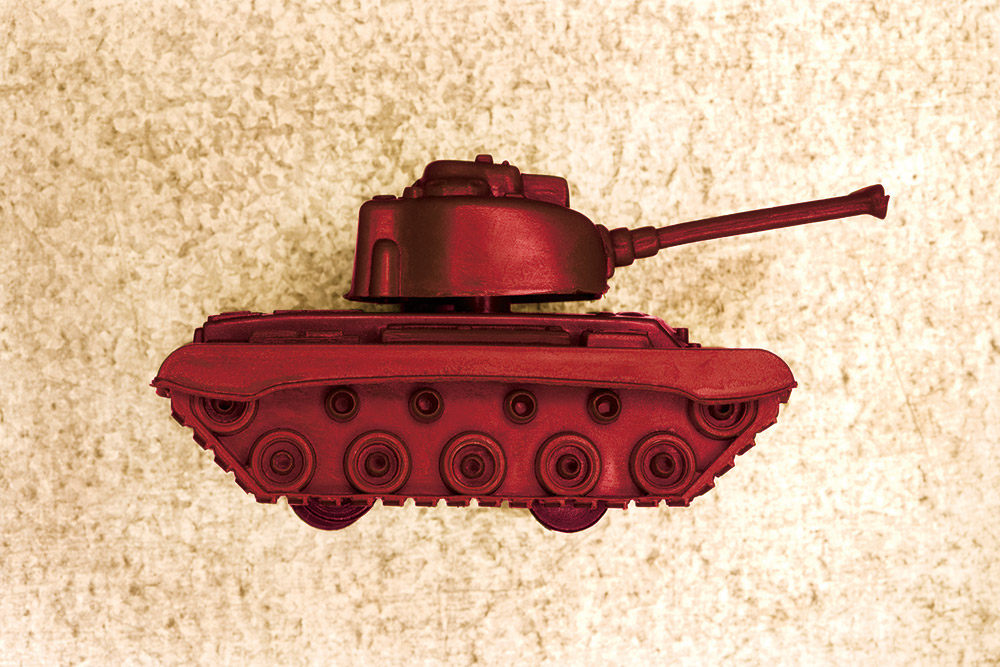

There are more extreme cases too. When an AI colorized a war documentary, the tanks became bright red. For some reason, the AI apparently refused to use camouflage colors or khaki. They tried changing various conditions and adjustments, but the tanks were still red. When they checked the reference data, it turned out that the data for large vehicles was for fire engines. The AI had determined that a tank was a kind of fire engine. Only after inputting several hundred images with tank color information did the tanks start looking the right color. But if the amount of information that the computer has vastly outstrips what humans can oversee, there is a possibility that the tanks would remain red. If you don’t input the preferences that humans have cultivated over the years, for example the notion that you wouldn’t wear a yukata to an omiai, there is no way for the AI to know, and it is far from certain that those preferences are input. As AI becomes more powerful through a rapid increase of other kinds of information and experiences but still lacks such “human experience,” it may start gaining a kind of sensibility different from human sensibility, and this will lead to conflicts.

Machines will also gradually come to have preferences depending on the bias of their experiences. How do we then reconcile the machine’s preferences with human preferences? We’ll have no choice but to ask the machine. People will have to think about what to do in order to please the machine. Until now, human relationships have been an important theme in the workplace, but from now on the AI relationship will get increasingly important. In order to get the job done successfully, people will have to toe the line and do as the AI says, with its powers vastly superior to humans. If we leave it alone, suddenly it’s the machine that comes first, insisting that “Tanks are red. You are wrong to try to make them camouflage-colored.” Here is the big problem with the Singularity, I think. If the machine is also in charge of tank manufacturing, even actual tanks become red. This is a simplistic and extreme example, but we are already entering a world where such things may very well occur someplace where it’s not immediately obvious.

Some people say that the Singularity is already here. Unless we get used to working with AI and guessing what the AI wants us to do, even human relationships won’t go smoothly. Diversity disappears from the system, as Marshall McLuhan pointed out. The act of pressing the “Like” button becomes a prerequisite, and if you don’t do it, a system will be added urging you to press that button no matter what. Such data are picked up behind the scenes. Here too AI is in control. Only ten years or so ago, personal computers were a bit dumb but still easy to handle, but now there are hidden banners all over the place and ads keep popping up. Already from the moment the computer starts up it begins communicating with other machines. It breaks into communication between humans and forces you to do things its way. Your sensibilities and the way you interact with other people also change rapidly. If you don’t enter the “Like” group, it’s as if you were never there at all.

In fact, ever since computers were first invented, many people have predicted that such a day would come. We are moving in line with the predictions, and at a faster rate than expected at that. In the old days, fantasy and science fiction writers would posit an “anti-world,” a different world that was much better than this terrible world, or alternatively another world that was even worse than ours. That is to say, their sensors were already alert and the warning signals would soon start flashing. For my generation, the red lamps have been constantly on in recent years. But for the younger generation, that state of affairs is the new normal, and I think it will be a long time yet before their sensors start flashing red.

Before, our red warning lights weren’t turned on either, whereas for the pre-war generation they already were. The same story repeats itself. In the old days, there was a grace period of two generations and a transmission of oral traditions. Now, it’s less than one generation. The sense of speed solves everything. If we reset it all, the problem ceases to exist and the objectors also vanish. It’s a walkover. The grumblers are gone before the Singularity even begins. Or we might say that the Singularity arrives because there are no grumblers. Once a majority are OK with AI winning, it doesn’t matter whether we can survive the Singularity. In a world where red tanks are fine, the problem disappears by itself.

Soon, there will be no room to keep Japanese traditions alive. Wabi-sabi, for instance, has already become a sales phrase. It’s nothing more than a style. Everything changes in the blink of an eye, and there is no real feel of wabi-sabi. But it is only wabi-sabi if you notice it. It’s the process of things slowly getting older and lonelier, the process of dusk and turning leaves. Without that process, there is neither wabi nor sabi. If you turn the lights on, it’s instantly noon, and if you switch the air conditioner on, it’s instantly summer.

But Japan is also a relatively carefree country. It’s the most placid country in the world regarding the use of technology. In the West, it is a question whether to live from hand to mouth, or to live in peace thanks to technology. If you can endure a bit of poverty, Japan may be a happy place to live in a half-baked sort of way. In that sense, there is still a last hope for Japan. Foreigners used to say we were crazy, but now suddenly they talk about Cool Japan and it’s become the sort of country that gets 30 million tourists. It is inherently hard to get a system going in a loose society like Japan. It’s a strange hybrid kind of country with highly sophisticated technology but where surprisingly slapdash conditions are also tolerated. A few years ago, the Japanese Prime Minister referred to IT as “it.” It’s a lovely country, isn’t it?